The rapid evolution of artificial intelligence is transforming nearly every sector. Among its most striking tools are Generative Adversarial Networks (GANs), which create remarkably realistic synthetic images and are changing how many industries produce visual content. Industry forecasts put the global market for AI-generated content in the billions of dollars within the next few years, a measure of how much potential the technology holds.

Market & Trends: The Ascent of AI-Generated Imagery

The market for AI-generated content, including images created by GANs, is experiencing exponential growth. A recent report by ResearchAndMarkets.com projects the global AI-generated content market to reach $XX billion by 20XX, representing a compound annual growth rate (CAGR) of over XX%. This expansion is driven by a confluence of factors, including:

- Greater processing power, driven by advances in hardware such as GPUs.

- The growing need for dynamic, customized visual content across various digital platforms.

- User-friendly AI tools that make image generation accessible even to non-technical users.

Comparison of AI Image Generation Techniques

| Feature | GANs | Other Image Generation Methods |
|---|---|---|
| Realism | High | Variable |
| Control | Fine-grained | Can be limited |
| Training Data | Extensive | Varies |
| Computational Cost | High | Moderate |
| Popular Uses | Content creation, Data augmentation | Image editing, Simple graphics |

The Actionable Framework: Decoding GANs and their Functionality

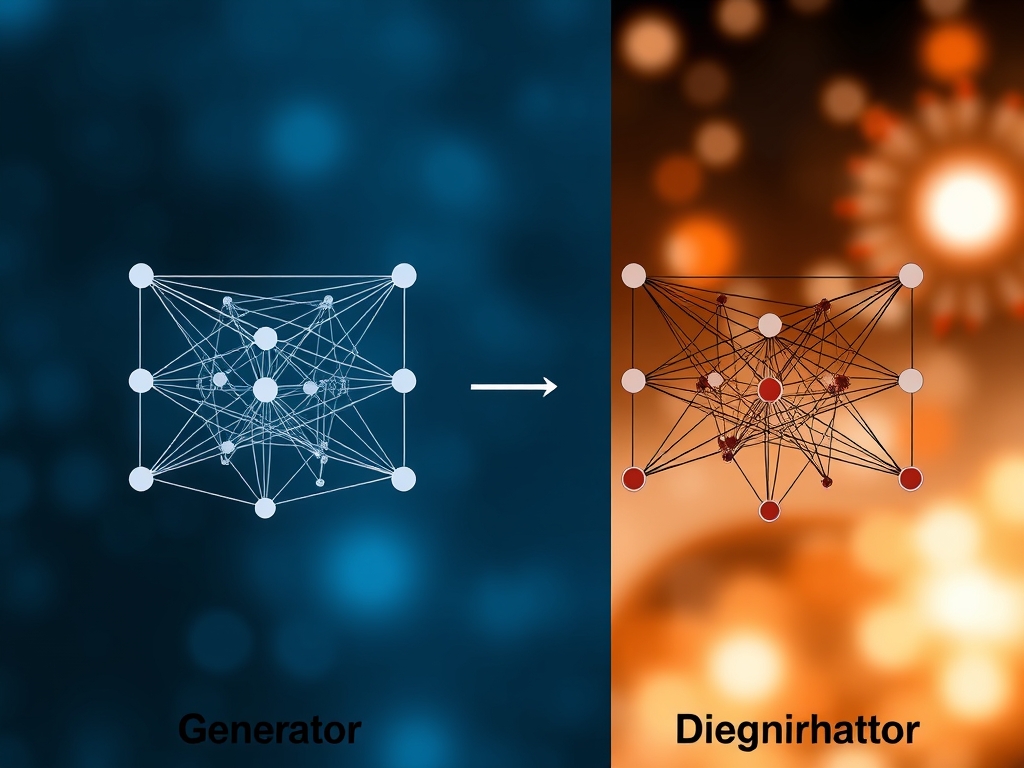

GANs operate on a fascinating principle: they pit two neural networks against each other in a game of adversarial learning. One network, the generator, creates new images. The other, the discriminator, tries to distinguish between real images and the ones generated by the generator. Through this iterative process, the generator learns to produce increasingly realistic images that can deceive the discriminator.
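The original GAN paper (Goodfellow et al., 2014) expresses this contest as a single minimax objective. Here D(x) is the discriminator's estimated probability that x is a real image, G(z) is the image the generator produces from a noise vector z, p_data is the distribution of real images, and p_z is the noise distribution. The discriminator tries to maximize V, while the generator tries to minimize it:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$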

Here's a simplified breakdown:

Step 1: Data Preparation

The initial stage involves gathering a large dataset of images relevant to the desired output. For instance, to generate realistic faces, you would collect thousands of facial images. The discriminator learns from this dataset directly. The generator never sees the real images; it learns about them only through the discriminator's feedback.
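Beyond collecting images, preparation usually means resizing them to a common resolution and rescaling pixel values to a fixed numeric range. The sketch below covers the rescaling step only. It assumes each image is a flat list of 8-bit pixel values (0 to 255) and maps them to [-1, 1], the range commonly used when the generator ends in a tanh activation (as in DCGAN). The function names are illustrative and do not come from any library.

```python
def scale_pixels(pixels):
    """Map 8-bit pixel values (0-255) to the range [-1, 1]."""
    return [p / 127.5 - 1.0 for p in pixels]

def unscale_pixels(values):
    """Invert scale_pixels, rounding and clamping to valid 8-bit values."""
    return [min(255, max(0, round((v + 1.0) * 127.5))) for v in values]

def prepare_dataset(images):
    """Rescale every image (a flat list of pixels), skipping empty entries."""
    return [scale_pixels(img) for img in images if img]

dataset = prepare_dataset([[0, 128, 255], [], [64, 192, 32]])
print(len(dataset))   # 2: the empty entry was skipped
```

`unscale_pixels` is the inverse step you would apply to generator outputs before saving them as images.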

Step 2: Training the Generator

The generator begins with random noise and, through the training process, learns to transform this noise into images that start to resemble the training data. The goal is to "fool" the discriminator.
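To make this step concrete, here is a deliberately tiny, hypothetical 1-D stand-in for a generator. Instead of a neural network producing images, an affine function turns each noise value into a single number. The loss shown is the commonly used "non-saturating" generator loss, the average of -log D(G(z)). It gets smaller as the discriminator is fooled into scoring the generator's samples as real.

```python
import math
import random

def sample_noise(n, rng):
    """Draw n latent values z from a standard normal distribution."""
    return [rng.gauss(0.0, 1.0) for _ in range(n)]

def toy_generator(z, weight, bias):
    """Hypothetical 1-D generator: an affine map from noise to a sample."""
    return weight * z + bias

def generator_loss(d_scores_on_fakes):
    """Non-saturating generator loss: mean of -log D(G(z)).

    d_scores_on_fakes are the discriminator's probabilities (strictly
    positive) that each generated sample is real.
    """
    return -sum(math.log(p) for p in d_scores_on_fakes) / len(d_scores_on_fakes)

rng = random.Random(0)
fakes = [toy_generator(z, weight=0.5, bias=3.0) for z in sample_noise(4, rng)]
print(generator_loss([0.1, 0.2]))   # discriminator not fooled: high loss
print(generator_loss([0.8, 0.9]))   # discriminator mostly fooled: low loss
```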

Step 3: Training the Discriminator

The discriminator analyzes the images it receives, both real ones from the dataset and fake ones produced by the generator. Its objective is to correctly identify the real images and flag the fakes.
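The discriminator's objective is usually implemented as binary cross-entropy, with real images labelled 1 and generated images labelled 0. This minimal sketch (names are illustrative) takes the discriminator's output probabilities directly. The small `eps` clamp is an assumption of this sketch that avoids taking the log of zero.

```python
import math

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy for the discriminator.

    d_real: D's probabilities that real images are real (target 1).
    d_fake: D's probabilities that generated images are real (target 0).
    """
    real_term = -sum(math.log(max(p, eps)) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(max(1.0 - p, eps)) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# A discriminator that outputs 0.5 for everything scores 2 * log(2), about 1.386.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))
# One that separates real from fake well scores much lower.
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]))
```

Some implementations average the two terms instead of adding them, which only rescales the loss.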

Step 4: The Adversarial Loop

The generator and discriminator are trained iteratively. The generator improves its ability to create realistic images, and the discriminator sharpens its ability to detect fakes. This constant feedback loop drives the GAN toward generating more convincing outputs.
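The alternating loop can be seen end to end in a toy setting. The sketch below is a hypothetical, self-contained illustration rather than a practical recipe. The "real data" are numbers drawn from a normal distribution with mean 0, the generator is G(z) = w * z + b, the discriminator is a logistic model D(x) = sigmoid(a * x + c), and the gradients are written out by hand. Each iteration performs one discriminator step, then one generator step using the non-saturating loss -log D(G(z)). The batch size, learning rate, and step count are illustrative choices.

```python
import math
import random

def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(steps=3000, batch=64, lr=0.05, seed=0):
    """Alternate discriminator and generator updates on 1-D toy data.

    Real data: N(0, 1). Generator: G(z) = w * z + b, z ~ N(0, 1).
    Discriminator: D(x) = sigmoid(a * x + c).
    Returns the final w, b and the history of b.
    """
    rng = random.Random(seed)
    a, c = 0.0, 0.0   # discriminator parameters
    w, b = 1.0, 3.0   # generator starts far from the real mean of 0
    history = []
    for _ in range(steps):
        # Discriminator step: minimize -log D(real) - log(1 - D(fake)).
        real = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [w * rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        grad_a = grad_c = 0.0
        for x in real:
            err = sigmoid(a * x + c) - 1.0   # derivative w.r.t. the logit
            grad_a += err * x
            grad_c += err
        for x in fake:
            err = sigmoid(a * x + c)         # derivative w.r.t. the logit
            grad_a += err * x
            grad_c += err
        a -= lr * grad_a / batch
        c -= lr * grad_c / batch

        # Generator step: minimize -log D(G(z)) against the updated discriminator.
        grad_w = grad_b = 0.0
        for _ in range(batch):
            z = rng.gauss(0.0, 1.0)
            d_out = (sigmoid(a * (w * z + b) + c) - 1.0) * a  # d loss / d G(z)
            grad_w += d_out * z
            grad_b += d_out
        w -= lr * grad_w / batch
        b -= lr * grad_b / batch
        history.append(b)
    return w, b, history

w, b, history = train_toy_gan()
print(f"generator offset after training: b = {b:.3f}")
```

Starting from b = 3.0, the generator's offset is pulled toward the real mean of 0. Because this discriminator is linear, it can only tell the two distributions apart by their means, so nothing pulls w toward the real data's spread: a small-scale reminder that a generator only learns what its discriminator can detect.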

Step 5: Iteration and Refinement

The process continues for thousands, sometimes millions, of iterations. Throughout training, output quality is monitored and hyperparameters such as the learning rate are adjusted to improve the generated images.

Analytical Deep Dive: The Impact and Applications

The impact of GANs is far-reaching, with practical applications ranging from medical imaging to video game development.

- Data Augmentation: GANs are used to create synthetic data, increasing the size and diversity of the datasets used to train other AI models. This can improve the performance and robustness of those models, especially in fields like medical imaging and computer vision, where real labeled data is scarce or expensive to collect.

- Content Creation: In digital marketing and advertising, GANs can generate product images, promotional materials, and even videos, which can significantly reduce production costs and timelines.

- Creative Arts: Artists and designers are using GANs to explore new forms of creative expression, generate unique textures and patterns, and create entirely novel visual styles.
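For the data-augmentation use case, the mechanics are simple once a trained generator exists: draw extra samples from it and mix them into the real training set. In this sketch, `sample_fn` is a hypothetical stand-in for a trained generator (any callable that returns one sample), and `synthetic_ratio` controls how many synthetic samples are added per real one. Tagging each sample's origin is an assumed convention, not a requirement.

```python
import random

def augment_with_synthetic(real_samples, sample_fn, synthetic_ratio, seed=0):
    """Mix real samples with generator output, tagging each sample's origin.

    synthetic_ratio is the number of synthetic samples per real sample,
    e.g. 0.5 adds half as many synthetic samples as there are real ones.
    """
    rng = random.Random(seed)
    n_synthetic = int(len(real_samples) * synthetic_ratio)
    mixed = [(x, "real") for x in real_samples]
    mixed += [(sample_fn(rng), "synthetic") for _ in range(n_synthetic)]
    rng.shuffle(mixed)   # interleave so training batches contain both kinds
    return mixed

# Stand-in "generator" for illustration: it just draws from a normal distribution.
real = [float(i) for i in range(100)]
mixed = augment_with_synthetic(real, lambda rng: rng.gauss(50.0, 10.0), 0.5)
print(len(mixed))   # 150
```

The tags make it easy to check that evaluation and test sets contain real data only.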

Strategic Alternatives & Adaptations: Varying Approaches

Not all GANs are created equal: different architectures suit different tasks, and understanding their differences helps you select the best type for your needs. Here's a brief overview:

- Vanilla GANs: The original GAN architecture. Can be unstable and produce less-than-optimal results.

- DCGANs (Deep Convolutional GANs): Use convolutional layers, which are well suited to image generation, and often train more stably and produce better results than the vanilla version.

- StyleGAN: A more advanced architecture that allows fine-grained control over the style of generated images.
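One concrete way to see how a DCGAN-style generator builds an image is to follow the spatial size through its strided transposed convolutions (also called fractionally-strided convolutions), each of which doubles the width and height. The sketch uses the standard output-size formula for a transposed convolution without dilation or output padding, out = (in - 1) * stride - 2 * padding + kernel. The settings kernel 4, stride 2, padding 1 are a common DCGAN choice, assumed here for illustration.

```python
def conv_transpose_out(size, kernel=4, stride=2, padding=1):
    """Output width/height of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

def upsampling_schedule(start=4, layers=4, kernel=4, stride=2, padding=1):
    """Spatial sizes after each upsampling layer, starting from a start x start map."""
    sizes = [start]
    for _ in range(layers):
        sizes.append(conv_transpose_out(sizes[-1], kernel, stride, padding))
    return sizes

# Treating the noise vector as a 1x1 map, a stride-1 layer with kernel 4 gives 4x4...
first = conv_transpose_out(1, kernel=4, stride=1, padding=0)   # 4
# ...and four stride-2 layers then grow it to a 64x64 image.
print(upsampling_schedule(start=first))   # [4, 8, 16, 32, 64]
```

Changing one setting in isolation breaks the clean doubling: with kernel 3 and the same stride and padding, a 4x4 map becomes 7x7. That is why these values are chosen together.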

For Beginner Implementation, it's best to start with accessible, pre-trained models; numerous online platforms provide easy-to-use interfaces that require minimal technical skill. For Intermediate Optimization, focus on understanding and tuning hyperparameters. Finally, for Expert Scaling, consider custom training on specialized datasets and deploying models on cloud infrastructure for efficiency.
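At the intermediate stage, a small, systematic sweep is easier to reason about than ad hoc tweaks. The sketch below starts from the settings reported in the DCGAN paper (Adam with learning rate 0.0002 and beta1 = 0.5, mini-batch size 128) and enumerates variations around them. The helper name is hypothetical, and training and scoring each configuration is left to your own pipeline.

```python
from itertools import product

# Settings reported in the DCGAN paper (Radford et al., 2015).
DCGAN_DEFAULTS = {"learning_rate": 2e-4, "adam_beta1": 0.5, "batch_size": 128}

def hyperparameter_grid(base, **variations):
    """Yield one config per combination of the given variations, on top of base."""
    keys = list(variations)
    for values in product(*(variations[k] for k in keys)):
        config = dict(base)              # copy so base is never mutated
        config.update(zip(keys, values))
        yield config

configs = list(hyperparameter_grid(DCGAN_DEFAULTS,
                                   learning_rate=[1e-4, 2e-4, 4e-4],
                                   batch_size=[64, 128]))
for cfg in configs:
    print(cfg)   # 6 configurations; pass each to your own training run
```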

Risk Mitigation: Common Errors to Avoid

Like any cutting-edge technology, using GANs is not without its pitfalls. Here are some of the most common issues and how to mitigate them:

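- Mode Collapse: The generator produces only a narrow range of outputs (in the extreme, nearly the same image every time) regardless of its input noise. Common remedies include adjusting the GAN's architecture or loss function, for example switching to a Wasserstein loss; more diverse training data may also help.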

- Vanishing Gradients: When the discriminator becomes too confident, the gradients it passes back to the generator shrink toward zero and the generator stops learning. Experiment with different loss functions, optimizers, learning rates, and GAN architectures.

- Overfitting: The discriminator memorizes the training set instead of learning general features, or the generator reproduces training images almost verbatim, leading to poor generalization. Data augmentation, regularization techniques, and a larger, more diverse training set can help prevent it.
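A worked example shows where vanishing gradients come from. Write the discriminator's output on a fake sample as D = sigmoid(t), where t is its raw score (logit). If the generator minimizes log(1 - D), as in the original minimax objective, the gradient with respect to t is -sigmoid(t), which all but disappears once the discriminator confidently rejects fakes (t very negative). The "non-saturating" alternative proposed in the original GAN paper, minimizing -log D, has gradient -(1 - sigmoid(t)), which stays near -1 in exactly that situation. The function names below are illustrative.

```python
import math

def sigmoid(t):
    """Logistic function (adequate for the moderate t values used here)."""
    return 1.0 / (1.0 + math.exp(-t))

def saturating_grad(t):
    """d/dt of log(1 - sigmoid(t)): the generator term of the minimax loss."""
    return -sigmoid(t)

def non_saturating_grad(t):
    """d/dt of -log(sigmoid(t)): the non-saturating generator loss."""
    return -(1.0 - sigmoid(t))

# t = 0: the discriminator is unsure; t = -10: it confidently calls the sample fake.
for t in (0.0, -5.0, -10.0):
    print(f"t={t:6.1f}  saturating={saturating_grad(t):.6f}  "
          f"non-saturating={non_saturating_grad(t):.6f}")
```

At t = 0 both gradients equal -0.5; they only diverge as the discriminator grows confident, which is when the generator most needs a useful signal.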

Performance Optimization & Best Practices: Elevating Results

To get the most out of GANs, follow these best practices:

- Experiment with Architectures: Architecture choice strongly affects training stability and output quality. Try different architectures (DCGAN, StyleGAN) to find the best fit for your needs.

- Monitor Training Progress: Track the losses of both the generator and the discriminator. Sudden divergence, or a discriminator loss that collapses toward zero, can reveal problems early.

- Fine-Tune Hyperparameters: Hyperparameter tuning (learning rate, batch size, etc.) significantly impacts results.

- Leverage Pre-trained Models: Use pre-trained models and fine-tune them on your specific dataset.
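Loss curves are easiest to act on when they are checked automatically. The heuristic below is a hypothetical sketch, not a standard tool. It flags non-finite losses (training has diverged) and a recent average discriminator loss close to zero, which for a binary cross-entropy discriminator means it is classifying almost perfectly and may be starving the generator of useful gradients. The window size and the 0.05 threshold are arbitrary assumptions to tune for your setup.

```python
import math

def check_training_health(d_losses, g_losses, window=100, d_floor=0.05):
    """Return warning messages based on the most recent `window` loss values.

    Thresholds are illustrative heuristics, not established constants.
    """
    recent = list(zip(d_losses[-window:], g_losses[-window:]))
    if not recent:
        return []
    if any(not (math.isfinite(d) and math.isfinite(g)) for d, g in recent):
        return ["non-finite loss: training has diverged"]
    warnings = []
    mean_d = sum(d for d, _ in recent) / len(recent)
    if mean_d < d_floor:
        warnings.append("discriminator loss near zero: D may be overpowering G")
    return warnings

# Example: call every few hundred steps with the logged loss histories.
print(check_training_health([1.3] * 500, [0.8] * 500))    # []
print(check_training_health([0.01] * 500, [6.0] * 500))   # one warning
```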

Scalability & Longevity Strategy

For sustained success with GANs, the following elements are critical:

- Continuous Learning: Keep up-to-date with new research and advances in GAN architectures.

- Optimize Hardware: High-quality GPUs and ample memory are essential for fast training.

- Automate Pipelines: Automate data preparation, training, and deployment for scalable workflows.

Conclusion

Generative Adversarial Networks (GANs) represent a transformative technology with applications that span industries. By mastering these powerful tools and adopting the described best practices, you can unlock incredible possibilities for content creation, data augmentation, and creative innovation. The future of image generation is here, and it's powered by AI.

Frequently Asked Questions

- What are some real-world examples of GAN usage? GANs are used in many fields. Examples include creating new designs in fashion, making photorealistic synthetic images for advertising, improving medical imaging by generating additional synthetic datasets, and enhancing video games by generating assets.

- Are GANs easy to use? While the underlying concepts are complex, there are numerous tools and platforms that provide user-friendly interfaces, making it easier to leverage GANs without extensive coding knowledge.

- What are the limitations of GANs? GANs can be computationally expensive to train and can suffer from issues like mode collapse and vanishing gradients. The generated images may still have imperfections.

- How can I learn more about GANs? There are many online courses, tutorials, and research papers available. Start with the basics and gradually delve into the more complex aspects of these powerful generative models.