Did you know that more than 80% of marketers say that A/B testing helps them improve their conversion rates? The power of Conversion Rate Optimization (CRO) A/B testing lies in its ability to transform guesswork into data-driven decisions. In today’s hyper-competitive digital landscape, relying on intuition alone is no longer enough. This guide dives deep into the art and science of A/B testing, equipping you with the knowledge and tools to unlock massive conversion lifts.

Foundational Context: Market & Trends

The CRO market is experiencing significant growth, driven by the increasing need for businesses to optimize their online presence and maximize returns on investment. A recent report by MarketWatch projects the global CRO market to reach \$3.3 billion by 2028, growing at a CAGR of 13.9% from 2021 to 2028. This rapid expansion underscores the importance of effective A/B testing strategies.

Key trends shaping the CRO landscape include:

- Mobile Optimization: With mobile traffic surpassing desktop, A/B testing for mobile experiences is critical.

- Personalization: Tailoring content and offers based on user behavior significantly boosts conversions.

- AI-Powered Testing: Using AI to automate the testing process and identify winning variations is becoming more prevalent.

- Focus on User Experience (UX): A/B testing is now strongly aligned to how users experience websites and apps.

- Multivariate Testing: Tests that change several elements on the same page at once are becoming more popular.

| Metric | 2023 (Estimated) | 2028 (Projected) | CAGR (2021 to 2028) |
|---|---|---|---|
| Global CRO Market Size | \$2.0 billion | \$3.3 billion | 13.9% |

Core Mechanisms & Driving Factors

Successful A/B testing hinges on several core mechanisms. Understanding these factors is crucial for designing and implementing effective tests.

- Clear Objectives: Define specific, measurable, achievable, relevant, and time-bound (SMART) goals before starting any test.

- Hypothesis Formulation: Formulate a testable hypothesis based on data analysis and user research. What do you expect to happen?

- Traffic Allocation: Accurately divide traffic between the control (original) and variation (modified) pages.

- Statistical Significance: Ensure results are statistically significant before declaring a winner, so that the observed difference is unlikely to be random noise.

- Duration: Decide how long the test will run before you start it. Two to four weeks is typical, depending on traffic levels.

- Tools: Choose appropriate and reliable testing software/tools.

- Iteration & Analysis: Once the test is complete, analyze the collected data and use what you learn to design follow-up tests.
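As a rough illustration of the traffic allocation point above, here is a minimal sketch of deterministic bucketing. It assumes each visitor has a stable identifier; the function name `assign_variant`, the experiment name, and the 50/50 default split are hypothetical choices for this example, not the behavior of any particular testing tool.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Return "control" or "variation" for a user, stable across visits."""
    # Hash the experiment name together with the user ID so the same user
    # can land in different buckets for different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex digits to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "control" if bucket < split else "variation"


if __name__ == "__main__":
    # Hypothetical visitor IDs, for illustration only.
    for uid in ["visitor-1", "visitor-2", "visitor-3"]:
        print(uid, assign_variant(uid, "cta-color-test"))
```

Hashing rather than drawing a random number on each visit means a returning visitor keeps seeing the same version, which helps keep the comparison between control and variation clean.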

The Actionable Framework

Let’s break down the step-by-step process for a successful A/B testing program.

Step 1: Data Gathering and Hypothesis Development

Before anything else, understand your users and their behavior. Use tools like Google Analytics, heatmaps, and session recordings to identify pain points. For instance, high bounce rates on a specific landing page could be a sign of a design flaw.

- Actionable Advice: Analyze data related to your business to formulate hypotheses. Identify conversion rate issues (e.g., shopping cart abandonment, low click-through rates).

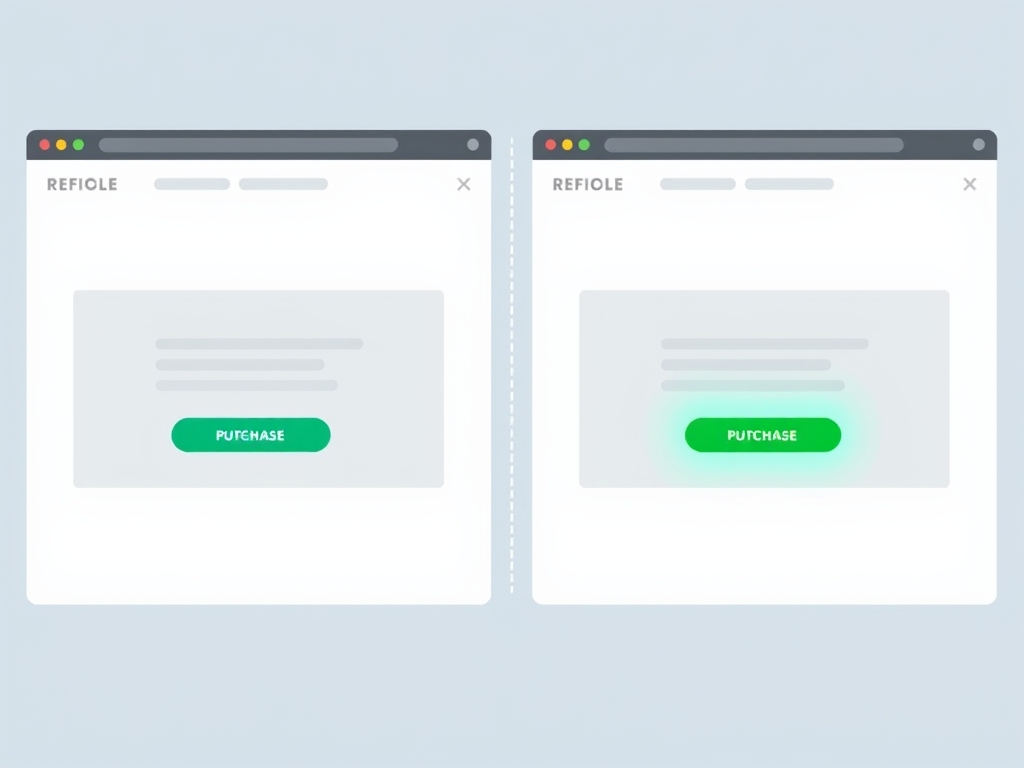

- For Example: "Changing the call-to-action (CTA) button color from blue to green will increase click-through rates on the product page because green is more visually appealing."

- Then test the hypothesis.

Step 2: Choosing the Right A/B Testing Tool

Select a tool that aligns with your specific needs. Popular options include Optimizely and VWO (Visual Website Optimizer). Google Optimize was once a common choice, but Google discontinued it in September 2023. Consider factors like ease of use, integration capabilities, and pricing.

- Pro Tip: List the features you need (multivariate testing, personalization, etc.) before you compare options, so you evaluate each tool against the same criteria.

Step 3: Designing and Implementing the Test

Carefully design the variation (B) of the element you are testing. Make only one change at a time to isolate its effect.

- Example: If testing a headline, change only the headline while keeping other elements constant.

- Once you have your variation, implement the test using your chosen A/B testing tool.

Step 4: Running the Test

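Ensure sufficient traffic is directed to both the control (A) and variation (B). Let the test run until it has collected the sample size you planned for, rather than stopping the moment the numbers look favorable, so the result can reach statistical significance reliably. Many experts use a 95% confidence level.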

- Important Note: The ideal duration depends on traffic volume. Generally, aim for at least a week or two, and sometimes even longer.
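To make the link between traffic volume and duration concrete, the sketch below estimates how many visitors each version needs using the standard two-proportion sample size approximation. The 80% power default, the function names, and the example numbers are assumptions for illustration; a testing tool's own calculator may use a different method.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, min_relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per variant for a two-sided test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    # Critical values for the significance level and the desired power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


def days_needed(n_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Days required if all daily visitors are split across the variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)


if __name__ == "__main__":
    # Hypothetical inputs: 3% baseline conversion, 20% relative lift to detect.
    n = sample_size_per_variant(0.03, 0.20)
    print("Visitors per variant:", n)
    print("Days at 2,000 visitors/day:", days_needed(n, 2000))
```

With these hypothetical inputs the estimate is on the order of 14,000 visitors per variant, which is why low-traffic pages often need several weeks. Smaller expected lifts push the required sample size up sharply.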

Step 5: Analyzing the Results and Deciding

- Determine whether one version outperformed the other by a statistically significant margin.

- If a winner emerges, implement the changes.

- If there's no clear winner, analyze why (consider the audience, the testing environment, and if anything may have been missed).
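One common way to check the first point is a two-proportion z-test. The sketch below is a minimal version under the usual large-sample assumptions; the function name and the visitor and conversion counts are made up for illustration, and your testing tool may report significance using a different method.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) comparing variation B against control A."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: 300/10,000 conversions for A, 360/10,000 for B.
    z, p = two_proportion_z_test(300, 10_000, 360, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
    print("Significant at the 95% level" if p < 0.05 else "No clear winner")
```

A p-value below 0.05 corresponds to the 95% confidence level mentioned in Step 4. A larger p-value means the data does not yet separate the two versions.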

Step 6: Iterating and Refining

A/B testing is an ongoing process. Use the data collected to inform future tests, creating a cycle of continuous improvement.

Analytical Deep Dive

Consider conversion rates based on specific traffic channels. For example, paid search traffic may have different conversion patterns than organic traffic.

- According to ConversionXL, the average conversion rate for websites is between 2% and 5%. However, this can vary wildly based on industry, traffic source, and other factors.

- Industry-Specific Benchmarks: Look at industry conversion rate benchmarks to understand whether your website's performance is good.

- Google Data: Google's analytics and search reporting tools are a useful source of conversion data for your own site.
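The per-channel comparison described above can be sketched as follows. It assumes you can export session records as (channel, converted) pairs; the channel names and the sample data are hypothetical.

```python
from collections import defaultdict


def conversion_rate_by_channel(sessions):
    """Map each traffic channel to its conversion rate (conversions / sessions)."""
    totals = defaultdict(lambda: [0, 0])  # channel -> [sessions, conversions]
    for channel, converted in sessions:
        totals[channel][0] += 1
        totals[channel][1] += int(converted)
    return {channel: conv / count for channel, (count, conv) in totals.items()}


if __name__ == "__main__":
    # Illustrative records only: (channel, did the session convert?)
    sample = [
        ("paid_search", True), ("paid_search", False),
        ("organic", False), ("organic", False),
        ("organic", True), ("organic", False),
    ]
    for channel, rate in conversion_rate_by_channel(sample).items():
        print(f"{channel}: {rate:.1%}")
```

Breaking results out this way can show whether an overall average is hiding one channel that converts much better or worse than the rest.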

Strategic Alternatives & Adaptations

Adapt your A/B testing approach based on your skill level:

- Beginner Implementation: Start with simple tests, like headline or CTA button changes. Use a tool with a user-friendly visual editor, such as VWO or Optimizely.

- Intermediate Optimization: Focus on testing multiple elements in parallel. Test variations of landing pages, product pages, and checkout processes. Use the testing tool's personalization features, as well as AI integrations.

- Expert Scaling: Implement advanced testing methodologies, such as multivariate testing, and conduct cross-channel optimization. This includes CRO on all platforms: websites, mobile apps, and other conversion points.
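To see why multivariate testing belongs at the expert level, it helps to count how many combinations a test creates. The sketch below enumerates them; the element names and options are hypothetical.

```python
from itertools import product


def multivariate_combinations(elements):
    """List every combination of options across the page elements under test."""
    names = list(elements)
    option_lists = [elements[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*option_lists)]


if __name__ == "__main__":
    # Hypothetical page elements and the options being tested for each.
    page_elements = {
        "headline": ["benefit-led", "feature-led"],
        "cta_color": ["blue", "green"],
        "hero_image": ["product", "lifestyle", "none"],
    }
    combos = multivariate_combinations(page_elements)
    print(len(combos), "combinations")
    print(combos[0])
```

Each combination needs enough traffic of its own to reach significance, so the visitor requirement grows with the product of the option counts. This is one reason simple A/B tests are usually the better starting point for lower-traffic sites.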

Validated Case Studies & Real-World Application

- E-commerce: A clothing retailer increased sales by 15% by testing different product descriptions. The winning variation focused on benefits rather than features.

- Software as a Service (SaaS): A SaaS company boosted sign-ups by 20% by changing the layout of its pricing page. The new design clearly explained the value of each package.

- Lead Generation: An agency improved lead generation by redesigning its landing pages to make the value proposition more visible.

Risk Mitigation: Common Errors

- Testing Too Many Elements at Once: Testing multiple elements simultaneously makes it difficult to pinpoint what influenced your results.

- Not Gathering Enough Data: Running tests for too short a time produces unreliable findings.

- Ignoring Statistical Significance: Making decisions based on inconclusive results.

- Failing to Segment Your Audience: Not accounting for differences in user behavior based on demographics, traffic source, etc.

- Setting up Tests Incorrectly: A simple misconfiguration can destroy the integrity of your testing.
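One way to catch a misconfigured test is to check whether the observed traffic split matches the split you intended. The sketch below uses a chi-square goodness-of-fit test with one degree of freedom; the function name, the 0.001 alert threshold, and the visitor counts are assumptions for illustration.

```python
import math


def traffic_split_p_value(n_control: int, n_variation: int,
                          expected_control_share: float = 0.5) -> float:
    """P-value for the observed split under the intended allocation."""
    total = n_control + n_variation
    expected_control = total * expected_control_share
    expected_variation = total - expected_control
    chi2 = ((n_control - expected_control) ** 2 / expected_control
            + (n_variation - expected_variation) ** 2 / expected_variation)
    # For one degree of freedom, the chi-square tail probability
    # equals erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


if __name__ == "__main__":
    # Hypothetical counts from a test configured as a 50/50 split.
    p = traffic_split_p_value(5000, 5400)
    print(f"p = {p:.6f}")
    if p < 0.001:
        print("Split looks off; check the test setup before trusting results.")
```

A very small p-value suggests visitors are not being divided as configured, for example because of a redirect or tracking problem, and the conversion comparison should be treated with caution until the cause is found.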

Performance Optimization & Best Practices

To drive optimal results:

- Prioritize Tests: Use data to determine which areas of your website have the most significant impact on conversions.

- Segment Your Audience: Tailor your testing to different user groups.

- Analyze User Behavior: Use tools to see how users interact with your website.

- Stay Agile: Continuously test and refine your strategies.

- Document and Share Results: Record every test and its outcome, and make sharing results part of the company culture.

“A/B testing is not a one-time activity, but a continuous cycle of improvement. Analyze, test, and iterate to achieve the greatest results.” – John Doherty, SEO consultant.

Conclusion

Mastering A/B testing is no longer optional; it’s essential. By embracing a data-driven approach and continually refining your strategies, you can unlock significant gains in conversion rates and propel your business to new heights. The key is to remember that every click, every interaction, and every data point contributes to a clearer understanding of your audience.

The Path Forward

- Develop a strong conversion optimization testing plan.

- Consistently optimize pages and tests.

- Never rely on guesswork.

- Use reliable and trustworthy testing data.

Knowledge Enhancement FAQs

- Q: How do I know if my test has enough traffic?

- A: Use an A/B testing calculator (often offered by testing tools) to determine how long you need to run your test.

- Q: What is statistical significance, and why is it important?

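- A: Statistical significance indicates how unlikely your observed difference would be if the change had no real effect. At a 95% confidence level, you accept roughly a 5% chance of a false positive. It reduces the risk of acting on random noise, but it does not guarantee that a change is truly effective.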

- Q: Can I A/B test on mobile devices?

- A: Yes, many tools offer A/B testing capabilities specifically for mobile websites and apps.

- Q: What is multivariate testing, and how is it different from A/B testing?

- A: Multivariate testing tests multiple variables on a page simultaneously to determine the best combination of elements, while A/B testing focuses on one variable at a time.

- Q: What if my A/B test shows no clear winner?

- A: This isn’t a failure. It means you may need to adjust your hypothesis, change your audience, or let the test run longer. Always try to understand why.

- Q: Are there any free A/B testing tools?

- A: Some tools offer free plans or trial periods, but you may need a paid subscription for more advanced features.

Start your conversion optimization journey today. Explore how AI tools and data-driven insights can improve the performance of your business!